Received: April 28, 2021

Accepted: May 27, 2022

Publication Date: March 9, 2023

Copyright The Author(s). This is an open access article distributed under the terms of the Creative Commons Attribution License (CC BY 4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are cited.

DOI: https://doi.org/10.6180/jase.202311_26(11).0010

ABSTRACT

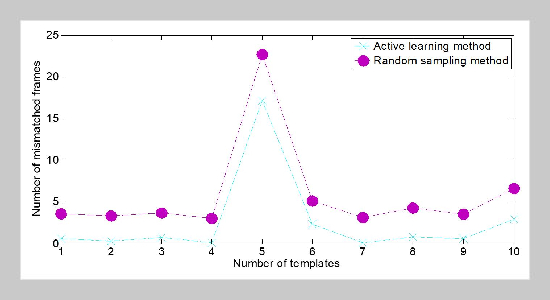

The receptive field of a CNN usually reflects its learning capacity, and it is limited by the size of the convolution kernel. Enlarging the receptive field with pooling, on the other hand, discards spatial information from the feature map. To obtain a large receptive field without this information loss, a deep multimodal fusion gaze-tracking model based on dilated convolution is proposed. Dilated convolution is used to further improve ResNet-50, and experiments show that it further improves the performance of the model. A comparison between the designed gaze-tracking model and a CNN-based gaze-tracking model demonstrates the superiority of the proposed model. To minimize manual intervention, this paper adopts an adaptive target-tracking method to collect training samples automatically. Following the idea of active learning, the learning algorithm selects the most informative samples from the input stream of training samples (those for which the matching confidence given by the nearest-neighbor classifier is lower than a set threshold) to construct the perceptual model. A feature that is invariant to changes in rotation, brightness, and contrast is selected as the target descriptor to enhance the discriminative ability of the perceptual model. The experimental results verify the effectiveness of the multimodal interactive visual perception method.
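The receptive-field argument can be made concrete with a small calculation. The sketch below is illustrative only and assumes a single spatial axis, 3 × 3 kernels, and a hypothetical three-layer stack; it does not reproduce how dilation is inserted into ResNet-50 in this work, which the abstract does not specify. The helper names `Layer`, `receptive_field` and `dilated_conv1d` are hypothetical. It computes the receptive field and cumulative stride of a layer stack, and includes a "same"-padded 1-D dilated convolution showing that dilation widens the kernel's extent while leaving the output length unchanged.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    kernel: int        # kernel size k along one axis
    stride: int = 1    # stride s
    dilation: int = 1  # dilation rate d (1 = ordinary convolution)


def receptive_field(layers):
    """Return (receptive field, cumulative stride) of a layer stack along one axis."""
    rf, jump = 1, 1
    for layer in layers:
        # A dilated kernel of size k spans d*(k-1)+1 input positions.
        k_eff = layer.dilation * (layer.kernel - 1) + 1
        rf += (k_eff - 1) * jump
        jump *= layer.stride  # spacing of output positions, in input pixels
    return rf, jump


def dilated_conv1d(x, w, dilation):
    """'Same'-padded, stride-1 dilated convolution (cross-correlation, as in CNNs)."""
    k = len(w)
    if k % 2 == 0:
        raise ValueError("odd kernel size required for symmetric 'same' padding")
    pad = dilation * (k - 1) // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    # Taps are spaced `dilation` apart; no pooling, so len(output) == len(x).
    return [sum(w[j] * padded[i + j * dilation] for j in range(k)) for i in range(len(x))]


plain = [Layer(3), Layer(3), Layer(3)]
dilated = [Layer(3, dilation=1), Layer(3, dilation=2), Layer(3, dilation=4)]
pooled = [Layer(3), Layer(2, stride=2), Layer(3)]  # 2x2 max-pool with stride 2

print(receptive_field(plain))    # (7, 1)
print(receptive_field(dilated))  # (15, 1)
print(receptive_field(pooled))   # (8, 2)
print(dilated_conv1d([1, 2, 3, 4, 5, 6, 7, 8], [1, 1, 1], dilation=2))
```

With three layers, dilation rates 1, 2 and 4 give a receptive field of 15 at full resolution, whereas inserting a stride-2 pooling layer reaches only 8 and halves the feature-map resolution (cumulative stride 2).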
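The sample-selection rule can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the class name `NearestNeighborModel`, cosine similarity as the matching confidence, the threshold of 0.9, and labels supplied by the target tracker are all hypothetical. A sample from the stream is added to the model only when its nearest stored template matches it with confidence below the threshold, so near-duplicates of what the model already knows are discarded.

```python
import math


def cosine(a, b):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class NearestNeighborModel:
    """Hypothetical perceptual model: a growing store of labelled feature templates."""

    def __init__(self, threshold=0.9):
        self.threshold = threshold  # hypothetical confidence threshold
        self.templates = []         # list of (feature_vector, label)

    def match(self, x):
        """Return (label, confidence) of the nearest stored template."""
        if not self.templates:
            return None, 0.0
        feat, label = max(self.templates, key=lambda t: cosine(x, t[0]))
        return label, cosine(x, feat)

    def update(self, stream):
        """Active-learning pass: store only samples the model matches poorly."""
        added = 0
        for x, label in stream:  # label assumed to come from the tracker
            _, confidence = self.match(x)
            if confidence < self.threshold:  # low confidence => informative sample
                self.templates.append((x, label))
                added += 1
        return added


stream = [([1.0, 0.0], "target"), ([0.99, 0.05], "target"),
          ([0.0, 1.0], "background"), ([0.7, 0.7], "target")]
model = NearestNeighborModel(threshold=0.9)
print(model.update(stream))       # 3
print(model.match([0.98, 0.02]))  # nearest template is labelled "target"
```

In the example, the second sample is almost identical to the first (confidence about 0.999) and is skipped, so only three of the four samples are stored.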

Keywords: interactive visual perception; human-computer interaction; deep multi-modal fusion; mixed reality
