REFERENCES

- [1] WHOReport:Visualimpairmentandblindness(2014).

- [2] Araki, M., Shibahara, K. and Mizukami, Y., “Spoken Dialogue System for Learning Braille,” 35th IEEE Annual Computer Software and Applications Conference, pp. 152�156 (2011). doi: 10.1109/COMPSAC. 2011.27

- [3] Arshad, H., Khan, U. S. and Izhar, U., “MEMS Based Braille System,” 2015 IEEE 15th International Conference on Nanotechnology, pp. 1103�1106 (2015). doi: 10.1109/NANO.2015.7388815

- [4] Matsuda, Y., Isomura, T., Sakuma, I., Kobayashi, E., Jimbo, Y. and Arafune, T., “Finger Braille Teaching System for People who Communicate with Deafblind People,” Proceedings of the 2007 IEEE International Conference on Mechatronics and Automation, pp. 3202�3207 (2007). doi: 10.1109/ICMA.2007.4304074

- [5] Al-Shamma, S. D. and Fathi, S., “Arabic Braille Recognition and Transcription into Text and Voice,” 5th Cairo International Biomedical Engineering Conference Cairo, pp. 16�18 (2010). doi: 10.1109/CIBEC. 2010.5716095

- [6] Abdul Malik, S. A. S., Ali, E. Z., Yousef, A. S., Khaled, A. H. and Abdul Aziz, O. A. Q., “An Efficient Braille Cells Recognition,” 6th International Conference on Wireless Communications Networking and Mobile Computing (WICOM), pp. 1�4 (2010). doi: 10.1109/ WICOM.2010.5601020

- [7] Onur, K., “Braille-2 Otomatik Yorumlama Sistemi,” Signal Processing and Communications Applications Conference (SIU), pp. 1562�1565 (2015). doi: 10. 1109/SIU.2015.7130146

- [8] Velazquez, R., Preza, E. and Hernandez, H., “Making eBooks Accessible to Blind Braille Readers,”IEEE International Workshop on Haptic Audio Visual Environments and Their Applications, pp. 25�29 (2008). doi: 10.1109/HAVE.2008.4685293

- [9] Rantala, J., Raisamo, R., Lylykangas, J., Surakka, V., Raisamo, J., Salminen, K., Pakkanen, T. and Hippula, A., “Methods for Presenting Braille Characters on a Mobile Device with a Touchscreen and Tactile Feedback,” IEEE Transactions on Haptics, Vol. 2, No. 1, pp. 28�39 (2009). doi: 10.1109/TOH.2009.3

- [10] Gaudissart, V., Ferreira, S., Thillou, C. and Gosselin, B., “Mobile Reading Assistant for Blind People,” Proceedings of European Signal Processing Conference, pp. 538�544 (2005).

- [11] Neto, R. and Fonseca, N., “Camera Reading for Blind People,” Procedia Technology, Vol. 16, pp. 1200�1209 (2014). doi: 10.1016/j.protcy.2014.10.135

- [12] http://www.u-tran.com/index.php,visitedinMay(2016).

- [13] Noguchi, S. and Yamada, M., “Real-time 3D Page Tracking and Book Status Recognition for High-speed Book Digitization Based on Adaptive Capturing,” 2014 IEEEWinterConference on ApplicationsofComputer Vision (WACV), pp. 24�26 (2014).

- [14] Stamatopoulos, N., Gatos, B. and Kesidis, A., “Automatic Borders Detection of Camera Document Images,” Int. Workshop Camera-BasedDocument Anal. Recognition Conf. (CDBAR), pp. 71�78 (2007).

- [15] Gatos, B., Pratikakis, I. and Perantonis, S. J., “Adaptive Degraded Document Image Binarization,” Pattern Recognition, Vol. 39, pp. 317�327 (2006). doi: 10. 1016/j.patcog.2005.09.010

- [16] Otsu, N., “A Threshold Selection Method from Graylevel Histogram,” IEEE Transactions on Systems, Man, and Cybernetics, Vol. 9, pp. 62�66 (1976). doi: 10. 1109/TSMC.1979.4310076

- [17] Wahl, F. M., Wong, K. Y. and Casey, R. G., “Block Segmentation and Text Extraction in Mixed Text/Image Documents”, Computer Graphics and Image Processing, Vol. 20, pp. 375�390 (1982). doi: 10.1016/ 0146-664X(82)90059-4

- [18] Chang, F., Chen, C. J. and Lu, C. J., “A Linear-time Component-labeling Algorithm Using Contour Tracing Technique,” Computer Vision and Image Understanding, Vol. 93, No. 2, pp. 206�220 (2004). doi: 10. 1016/j.cviu.2003.09.002

- [19] Jiang, H., “Research on the Document Image Segmentation Based on the LDA Model,” Advances in Information Sciences and Service Sciences (AISS), Vol. 4, No. 3, pp. 12�18 (2012). doi: 10.4156/aiss.vol4.issue3.2

- [20] Xiaoying, Z., “Study on Document Image Segmentation Techniques Based on Improved Partial Differential Equations,” Journal of Convergence Information Technology (JCIT), Vol. 8, No. 5, pp. 821�831 (2013). doi: 10.4156/jcit.vol8.issue5.96

- [21] Stamatopoulos, N., Gatos, B., Pratikakis, I. and Perantonis, S. J., “Goal-oriented Rectification of Camerabased Document Images,” IEEE Trans. on Image Processing, Vol. 20, No. 4, pp. 910�920 (2011). doi: 10. 1109/TIP.2010.2080280

- [22] Gao, Y., Ai, X., Rarity, J. and Dahnoun, N., “Obstacle Detection with 3D CameraUsing U-VDisparity,” Proceedings of the 2011 7th International Workshop on Systems, Signal Processing and Their Applications (WOSSPA), pp. 239–242 (2011). doi: 10.1109/WOSSPA.2011.5931462

- [23] Huang, H. C., Hsieh, C. T. and Yeh, C. H., “An Indoor Obstacle Detection System Using Depth Information,” Sensors (Basel), Vol. 15, No. 10, pp. 27116�27141 (2015). doi: 10.3390/s151027116

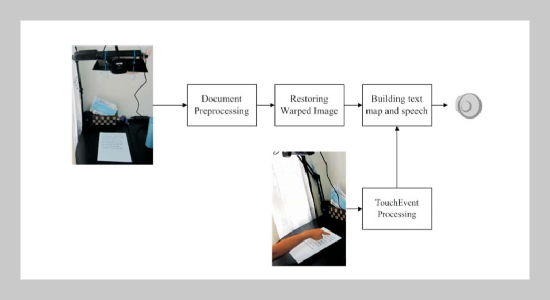

- [24] Hsieh, C. T., Lee, S. C. and Yeh, C. H., “Restoring Warped Document Image Based on Text Line Correction,” 2013 9th International Conference on Computing Technology and Information Management (ICCM 2013), Vol. 14, pp. 459�464 (2013).

- [25] Hsieh, C. T., Yeh, C. H., Liu, T. T. and Huang, K. C., “Non-visual Document Recognition for Blind Reading Assistant System,” 2013 9th International Conference on ComputingTechnology and Information Management (ICCM 2013), Vol. 14, pp. 453�458 (2013).

- [26] Tesseract: https://github.com/tesseract-ocr, visited in May (2016).

- [27] Text-to-speech:http://msdn.microsoft.com/en-us/library/ ms720163.aspx, visited in May (2016).