- [1] J. Pisane, S. Azarian, M. Lesturgie, and J. Verly, (2014) “Automatic target recognition for passive radar” IEEE Transactions on Aerospace and Electronic Systems 50(1): 371–392. DOI: 10.1109/TAES.2013.120486.

- [2] C. Sun, C. Zhang, and N. Xiong, (2020) “Infrared and visible image fusion techniques based on deep learning: A review” Electronics 9(12): 2162. DOI: 10.3390/electronics9122162.

- [3] Y. Zou, X. Liang, and T. Wang, (2013) “Visible and infrared image fusion using the lifting wavelet” TELKOMNIKA Indonesian Journal of Electrical Engineering 11(11): 6290–6295.

- [4] Q. Zhang and X. Maldague, (2016) “An adaptive fusion approach for infrared and visible images based on NSCT and compressed sensing” Infrared Physics & Technology 74: 11–20. DOI: 10.1016/j.infrared.2015.11.003.

- [5] Y. Liu, X. Yang, R. Zhang, M. K. Albertini, T. Celik, and G. Jeon, (2020) “Entropy-based image fusion with joint sparse representation and rolling guidance filter” Entropy 22(1): 118. DOI: 10.3390/e22010118.

- [6] B. Cheng, L. Jin, and G. Li, (2018) “General fusion method for infrared and visual images via latent low-rank representation and local non-subsampled shearlet transform” Infrared Physics & Technology 92: 68–77. DOI: 10.1016/j.infrared.2018.05.006.

- [7] Y. Huang and K. Yao. “Multi-Exposure Image Fusion Method Based on Independent Component Analysis”. In: Proceedings of the 2020 International Conference on Pattern Recognition and Intelligent Systems. 2020, 1–6.

- [8] P. Li, J. Gao, J. Zhang, S. Jin, and Z. Chen, (2023) “Deep Reinforcement Clustering” IEEE Transactions on Multimedia 25: 8183–8193.

- [9] J. Gao, P. Li, A. A. Laghari, G. Srivastava, T. R. Gadekallu, S. Abbas, and J. Zhang, (2024) “Incomplete Multiview Clustering via Semidiscrete Optimal Transport for Multimedia Data Mining in IoT” ACM Transactions on Multimedia Computing, Communications and Applications 20(6): 158:1–158:20. DOI: 10.1145/3625548.

- [10] J. Gao, M. Liu, P. Li, J. Zhang, and Z. Chen, (2023) “Deep Multiview Adaptive Clustering With Semantic Invariance” IEEE Transactions on Neural Networks and Learning Systems: 1–14. DOI: 10.1109/TNNLS.2023.3265699.

- [11] P. J. Burt and E. H. Adelson. “The Laplacian pyramid as a compact image code”. In: Readings in computer vision. Elsevier, 1987, 671–679.

- [12] W. Kong, Y. Lei, and H. Zhao, (2014) “Adaptive fusion method of visible light and infrared images based on non-subsampled shearlet transform and fast non-negative matrix factorization” Infrared Physics & Technology 67: 161–172. DOI: 10.1016/j.infrared.2014.07.019.

- [13] Y. Liu, X. Chen, J. Cheng, H. Peng, and Z. Wang, (2018) “Infrared and visible image fusion with convolutional neural networks” International Journal of Wavelets, Multiresolution and Information Processing 16(03): 1850018.

- [14] Z. Wang, J. Wang, Y. Wu, J. Xu, and X. Zhang, (2021) “UNFusion: A unified multi-scale densely connected network for infrared and visible image fusion” IEEE Transactions on Circuits and Systems for Video Technology 32(6): 3360–3374.

- [15] Y. Liu, C. Miao, J. Ji, and X. Li, (2021) “MMF: A Multi-scale MobileNet based fusion method for infrared and visible image” Infrared Physics & Technology 119: 103894.

- [16] J. Ma, W. Yu, P. Liang, C. Li, and J. Jiang, (2019) “FusionGAN: A generative adversarial network for infrared and visible image fusion” Information Fusion 48: 11–26. DOI: 10.1016/j.inffus.2018.09.004.

- [17] J. Ma, H. Xu, J. Jiang, X. Mei, and X.-P. Zhang, (2020) “DDcGAN: A dual-discriminator conditional generative adversarial network for multi-resolution image fusion” IEEE Transactions on Image Processing 29: 4980–4995. DOI: 10.1109/TIP.2020.2977573.

- [18] H. Zhang, H. Xu, Y. Xiao, X. Guo, and J. Ma. “Rethinking the image fusion: A fast unified image fusion network based on proportional maintenance of gradient and intensity”. In: Proceedings of the AAAI conference on artificial intelligence. 34. 07. 2020, 12797–12804.

- [19] S. Hao, T. He, B. An, X. Ma, H. Wen, and F. Wang, (2022) “VDFEFuse: A novel fusion approach to infrared and visible images” Infrared Physics & Technology 121: 104048. DOI: 10.1016/j.infrared.2022.104048.

- [20] X. Liu, H. Gao, Q. Miao, Y. Xi, Y. Ai, and D. Gao, (2022) “MFST: Multi-modal feature self-adaptive transformer for infrared and visible image fusion” Remote Sensing 14(13): 3233.

- [21] W. Tang, F. He, Y. Liu, Y. Duan, and T. Si, (2023) “Datfuse: Infrared and visible image fusion via dual attention transformer” IEEE Transactions on Circuits and Systems for Video Technology.

- [22] H.-Y. Lee, H.-Y. Tseng, J.-B. Huang, M. Singh, and M.-H. Yang. “Diverse image-to-image translation via disentangled representations”. In: Proceedings of the European conference on computer vision (ECCV). 2018, 35–51.

- [23] H.-Y. Lee, H.-Y. Tseng, Q. Mao, J.-B. Huang, Y.-D. Lu, M. Singh, and M.-H. Yang, (2020) “Drit++: Diverse image-to-image translation via disentangled representations” International Journal of Computer Vision 128: 2402–2417.

- [24] L. Tran, X. Yin, and X. Liu. “Disentangled representation learning gan for pose-invariant face recognition”. In: Proceedings of the IEEE conference on computer vision and pattern recognition. 2017, 1415–1424.

- [25] D. Yang, S. Huang, H. Kuang, Y. Du, and L. Zhang. “Disentangled representation learning for multimodal emotion recognition”. In: Proceedings of the 30th ACM International Conference on Multimedia. 2022, 1642–1651.

- [26] Q. Wang, Y. Zhang, Y. Zheng, P. Pan, and X.-S. Hua, (2022) “Disentangled representation learning for text-video retrieval” arXiv preprint arXiv:2203.07111.

- [27] P. Li, A. A. Laghari, M. Rashid, J. Gao, T. R. Gadekallu, A. R. Javed, and S. Yin, (2023) “A Deep Multimodal Adversarial Cycle-Consistent Network for Smart Enterprise System” IEEE Transactions on Industrial Informatics 19(1): 693–702. DOI: 10.1109/TII.2022.3197201.

- [28] H. Xu, X. Wang, and J. Ma, (2021) “DRF: Disentangled representation for visible and infrared image fusion” IEEE Transactions on Instrumentation and Measurement 70: 1–13.

- [29] Y. Gao, S. Ma, and J. Liu, (2022) “DCDR-GAN: A densely connected disentangled representation generative adversarial network for infrared and visible image fusion” IEEE Transactions on Circuits and Systems for Video Technology 33(2): 549–561.

- [30] M. Caron, I. Misra, J. Mairal, P. Goyal, P. Bojanowski, and A. Joulin, (2020) “Unsupervised learning of visual features by contrasting cluster assignments” Advances in neural information processing systems 33: 9912–9924.

- [31] URL: https://figshare.com/articles/dataset/TNO_Image_Fusion_Dataset/1008029.

- [32] URL: https://www.flir.com/oem/adas/adas-dataset-form/.

- [33] J. W. Roberts, J. A. Van Aardt, and F. B. Ahmed, (2008) “Assessment of image fusion procedures using entropy, image quality, and multispectral classification” Journal of Applied Remote Sensing 2(1): 023522.

- [34] G. Qu, D. Zhang, and P. Yan, (2002) “Information measure for performance of image fusion” Electronics Letters 38(7): 1.

- [35] Y. Han, Y. Cai, Y. Cao, and X. Xu, (2013) “A new image fusion performance metric based on visual information fidelity” Information Fusion 14(2): 127–135.

- [36] Y. J. Rao, (1997) “In-fibre Bragg grating sensors” Measurement Science & Technology 8(4): 355–375.

- [37] G. Cui, H. Feng, Z. Xu, Q. Li, and Y. Chen, (2015) “Detail preserved fusion of visible and infrared images using regional saliency extraction and multi-scale image decomposition” Optics Communications 341: 199–209.

- [38] A. M. Eskicioglu and P. S. Fisher, (1995) “Image quality measures and their performance” IEEE Transactions on Communications 43(12): 2959–2965.

- [39] H. Li and X.-J. Wu, (2018) “DenseFuse: A fusion approach to infrared and visible images” IEEE Transactions on Image Processing 28(5): 2614–2623.

- [40] X. Luo, Y. Gao, A. Wang, Z. Zhang, and X.-J. Wu, (2021) “IFSepR: A general framework for image fusion based on separate representation learning” IEEE Transactions on Multimedia.

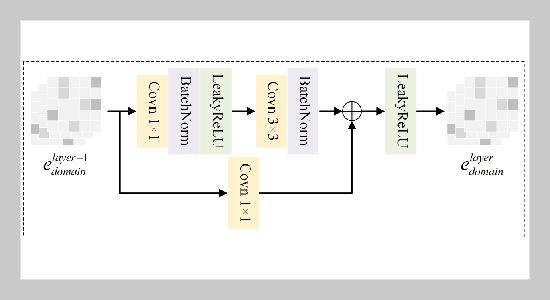

- [41] H. Li, X.-J. Wu, and J. Kittler, (2021) “RFN-Nest: An end-to-end residual fusion network for infrared and visible images” Information Fusion 73: 72–86.

- [42] H. Xu, J. Ma, J. Jiang, X. Guo, and H. Ling, (2020) “U2Fusion: A unified unsupervised image fusion network” IEEE Transactions on Pattern Analysis and Machine Intelligence 44(1): 502–518.

- [43] D. Wang, J. Liu, X. Fan, and R. Liu, (2022) “Unsupervised misaligned infrared and visible image fusion via cross-modality image generation and registration” arXiv preprint arXiv:2205.11876.

- [44] L. Tang, J. Yuan, H. Zhang, X. Jiang, and J. Ma, (2022) “PIAFusion: A progressive infrared and visible image fusion network based on illumination aware” Information Fusion 83: 79–92.

- [45] L. Tang, J. Yuan, and J. Ma, (2022) “Image fusion in the loop of high-level vision tasks: A semantic-aware real-time infrared and visible image fusion network” Information Fusion 82: 28–42. DOI: 10.1016/j.inffus.2021.12.004.

- [46] Z. Zhu, X. Yang, R. Lu, T. Shen, X. Xie, and T. Zhang, (2022) “Clf-Net: Contrastive learning for infrared and visible image fusion network” IEEE Transactions on Instrumentation and Measurement 71: 1–15. DOI: 10.1109/TIM.2022.3203000.

- [47] L. Tang, H. Zhang, H. Xu, and J. Ma, (2023) “Rethinking the necessity of image fusion in high-level vision tasks: A practical infrared and visible image fusion network based on progressive semantic injection and scene fidelity” Information Fusion: 101870. DOI: 10.1016/j.inffus.2023.101870.